Posted on Friday, July 27, 2012 2:18 AM

Introduction

SharePoint Server 2013 introduces a new capability called Request Management. Request Management allows SharePoint to understand more about, and control the handling of, incoming requests. Request Management employs a rules based approach, which enables SharePoint to take the appropriate action for a given request based upon administrator supplied configuration. This article series will provide comprehensive coverage of the new Request Management capability in three parts:

- Feature Capability and Architecture Overview (this article)

- Example Scenario and Configuration Step by Step

- Deployment Considerations and Recommendations

UPDATE: This article applies to SharePoint 2013 RTM.

What is Request Management?

As its name suggests, Request Management helps the farm manage incoming requests by evaluating logic rules against them in order to determine which action to take, and which machine or machines in the farm (if any) should handle the requests.

As you are hopefully aware, a service instance named “SharePoint Foundation Web Application Service” is responsible for handling and responding to incoming requests via IIS. All servers in the farm running this service instance host all Web Applications. These servers are known as “Web Servers” or commonly “WFEs” (a particularly heinous term in SharePoint that should die!).

As each Web Server in the Farm hosts all Web Applications, a load balancer is normally deployed to distribute incoming traffic across them. However, there are numerous scenarios where not all Web Servers should be responding to incoming user requests. Examples of such scenarios include dedicated crawl front ends and dedicated administration servers. In such scenarios DNS or load balancer configuration will be leveraged to ensure the right requests go to the right machines. However it is implemented, this is a basic form of request routing.

As the size of SharePoint deployments increases, and especially in very large scale hosting scenarios, the need for more powerful routing and management of requests becomes extremely important. Being able to inspect requests for various HTTP characteristics (such as the User Agent or Source IP Address) and then choose how to handle them provides the ability to be much smarter about how they are handled.

Request Management enables such “advanced” routing and throttling. Example behaviours include:

- Route requests to Web Servers with a good health score, preventing impact on lower health Web Servers

- Prioritise important requests (e.g. end user traffic) by throttling other types of requests (e.g. crawlers)

- Route requests to Web Servers based upon HTTP elements such as host name, or client IP Address

- Route traffic to specific servers based on type (e.g. Search, Client Applications)

- Identify and block harmful requests so the Web Servers never process them

- Route heavy requests to Web Servers with more resources

- Allow for easier troubleshooting by routing to specific machines experiencing problems and/or from particular client computers

It is important to note that Request Management does not replace load balancers or “traffic managers”, and indeed some of the above can be achieved with load balancers. However having the capability “inside” SharePoint is hugely important. It avoids costly and hard to manage load balancer configuration and allows for integration with the rest of the SharePoint stack. As Request Management inherently understands SharePoint, it provides much greater flexibility and helps address some of the most common operational pain points of very large SharePoint deployments.

Before making the choice to deploy Request Management, practitioners should take the time to understand the feature capability and determine if it’s the right choice for a given problem space. For some scenarios it may not be necessary at all, or there may be a far simpler way to achieve the desired goal(s). This particular topic will be covered in more depth in part three.

Architecture Overview

Request Management is implemented in a service instance named (surprisingly enough) “Request Management”. There is no associated service application. All configuration is performed using Windows PowerShell cmdlets (detailed extensively in part two).

The Request Management service instance is provisioned on every Web Server in the farm. That is, every machine that runs the SharePoint Foundation Web Application Service (SPFWA). This is known as “integrated mode”. Another mode, “dedicated”, is also possible but intentionally will NOT be discussed in this article series.

Request Management is scoped and configured on a per Web Application basis: for each Web Application configured, there is a Request Manager. The Request Manager runs within SPFWA under SPRequestModule.

At a high level there are three logical components of the Request Manager:

- Throttling and Routing

  - Throttle request if needed

  - Otherwise route request

- Prioritisation

  - Determine the correct group of servers to handle the request

- Load Balancing

  - Determine the correct single server to handle the request

The Request Manager continuously polls, or pings, each server running SPFWA to determine its operational characteristics in order to be able to perform prioritisation and load balancing.

The Request Manager is also the means by which the capability is configured and managed, and has various properties which govern its operation, such as whether routing is enabled, the ping interval and the timeout threshold.

The Request Manager configuration includes five key elements which dictate how rule logic is evaluated and applied before requests are routed and to which servers they are routed.

- Routing Targets (Servers)

A Routing Target is a machine running SPFWA. By default all servers in the farm running SPFWA are placed in the Routing Targets of the Request Manager. A Routing Target is also known as a Routing Machine.

Each Routing Target has a Static Weighting which is constant and will be evaluated. The Static Weight is assignable, thus the administrator can define “powerful” and “weak” Web Servers. In addition the availability of a Routing Target can be toggled and other “advanced” properties related to the polling of the machine can be set.

Each Routing Target also has a Health Weighting which is dynamic and will be evaluated. The Health Weighting cannot be assigned and is derived from the Health Score (0 to 10) which is updated by Health Analysis.

Routing Targets are associated with a Machine Pool.

- Machine Pool

A Machine Pool is a collection of Routing Targets which are contained within its MachineTargets property. A Machine Pool is the target of one or more Routing Rules.

- Routing Rules

A Routing Rule is the definition of the criteria to evaluate before routing requests which match the criteria. Each Routing Rule is associated with a Machine Pool and an Execution Group. Routing Rules can have an expiry time set.

- Throttling Rules

A Throttling Rule is the definition of the criteria to evaluate before refusing requests which match the criteria. Throttling Rules are NOT associated with a Machine Pool or an Execution Group. Throttling Rules can have an expiry time set.

- Execution Groups

An Execution Group is a collection of Routing Rules which allows the precedence of rule evaluation to be controlled and Routing Rules to be managed in batches. There are three execution groups (0, 1 & 2) which are evaluated in order. If no Execution Group for a Routing Rule is specified it is associated with Execution Group 0.
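To make the relationships between the five elements concrete, here is a minimal Python sketch of them as plain data classes. It is an illustrative model only, not SharePoint's object model: every class and field name (`RoutingTarget`, `static_weight`, `Criteria`, and so on) is an assumption made for this example, and real configuration is performed with the Windows PowerShell cmdlets covered in part two.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class RoutingTarget:
    """A Web Server (Routing Machine). All field names are hypothetical."""
    name: str
    static_weight: float = 1.0   # assignable by the administrator
    health_score: int = 0        # 0 to 10, updated by health analysis, not assignable
    available: bool = True       # availability can be toggled


@dataclass
class MachinePool:
    """A collection of Routing Targets, the target of one or more Routing Rules."""
    name: str
    machine_targets: List[RoutingTarget] = field(default_factory=list)


@dataclass
class Criteria:
    """What a rule matches on, e.g. ("UserAgent", "StartsWith", "...")."""
    prop: str
    match_type: str
    value: str


@dataclass
class RoutingRule:
    """Associated with a Machine Pool and an Execution Group."""
    name: str
    criteria: Criteria
    machine_pool: MachinePool
    execution_group: int = 0                 # group 0 when none is specified
    expiration: Optional[datetime] = None    # optional expiry time

    def __post_init__(self) -> None:
        if self.execution_group not in (0, 1, 2):
            raise ValueError("execution group must be 0, 1 or 2")


@dataclass
class ThrottlingRule:
    """NOT associated with a Machine Pool or an Execution Group."""
    name: str
    criteria: Criteria
    expiration: Optional[datetime] = None


# Example usage with made-up server and rule names.
crawl_pool = MachinePool("CrawlPool", [RoutingTarget("WFE1", static_weight=2.0)])
crawl_rule = RoutingRule(
    "CrawlerTraffic", Criteria("UserAgent", "Regex", "MS Search"), crawl_pool
)
print(crawl_rule.execution_group)  # no group given, so it falls into group 0
```

The asymmetry is the point of the model: only Routing Rules carry a pool and a group, while Throttling Rules stand alone because a refused request is never routed anywhere.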

Rule Evaluation Flow and Criteria

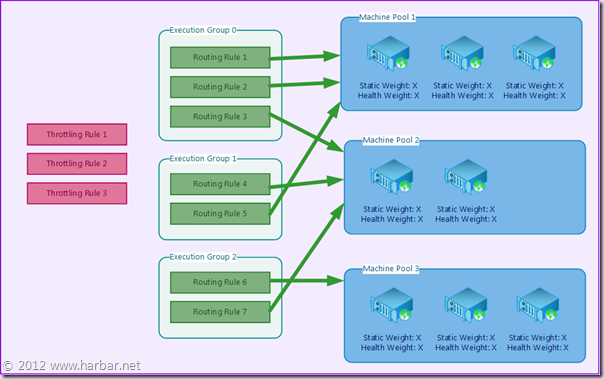

The following diagram presents a configuration scenario which includes the five Request Management elements to help describe the flow of rule evaluation by Request Manager.

First of all, Throttling Rules are evaluated. If an incoming request matches the criteria of a Throttling Rule, the request is refused.

Next the Routing Rules in Execution Group 0 are evaluated, followed by those in Execution Group 1 and Execution Group 2. Once an incoming request matches the criteria of a rule, the request is prioritised and routed to the correct Routing Target (server) within the Machine Pool with which the rule is associated.

Once an incoming request matches the criteria of a rule, no further Execution Groups are evaluated. For example, if in the above scenario an incoming request matches the criteria for Routing Rule 4, then Execution Group 2 and its rules (6 & 7) are NOT evaluated. This means that careful rules planning is paramount and the most important rules should be placed in Execution Group 0 to ensure they are evaluated.

The Routing Targets from Machine Pools where there are rule matches are combined. These are then the Routing Targets passed to the load balancing component to determine the correct individual Routing Target.

If an incoming request matches no rule criteria, it is routed across all available Routing Targets.
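The evaluation order described above can be sketched in a few lines of Python. This is a simplified model written for this article, not the Request Manager's actual code: the `evaluate` function, the tuple layout of the rules and the server names are all assumptions, and rule criteria are reduced to plain predicate functions.

```python
from typing import Callable, Dict, List, Optional, Tuple

Request = Dict[str, str]
Predicate = Callable[[Request], bool]
# A routing rule here is (execution_group, predicate, pool_of_target_names).
RoutingRule = Tuple[int, Predicate, List[str]]


def evaluate(
    request: Request,
    throttling_rules: List[Predicate],
    routing_rules: List[RoutingRule],
    all_targets: List[str],
) -> Optional[List[str]]:
    """Return None if the request is refused, else the candidate targets."""
    # 1. Throttling Rules come first: any match refuses the request.
    if any(matches(request) for matches in throttling_rules):
        return None

    # 2. Execution Groups are evaluated in order 0, 1, 2.
    for group in (0, 1, 2):
        matched = False
        candidates = set()
        for rule_group, matches, pool in routing_rules:
            if rule_group == group and matches(request):
                matched = True
                candidates.update(pool)  # pools of all matching rules combine
        if matched:
            # A match in this group means later groups are never evaluated.
            return sorted(candidates)

    # 3. No rule matched: every available target is a candidate.
    return sorted(all_targets)


# Example usage with hypothetical hosts and servers.
targets = ["WFE1", "WFE2", "WFE3"]
throttle = [lambda r: r.get("UserAgent", "").startswith("BadBot")]
rules = [
    (0, lambda r: r.get("Host") == "intranet.example.com", ["WFE1"]),
    (0, lambda r: r.get("Url", "").endswith(".xlsx"), ["WFE2"]),
    (1, lambda r: True, ["WFE3"]),
]
print(evaluate({"Host": "intranet.example.com", "Url": "/a.xlsx"}, throttle, rules, targets))
```

In the example request both group 0 rules match, so their pools are combined and the catch-all rule in group 1 is never reached. The returned list is what would then be handed to load balancing to pick a single server.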

Throttling and Routing Rules can match the following properties:

- CustomHeader

- Host

- HttpMethod

- IP

- SoapAction

- Url

- UrlReferrer

- UserAgent

Most of these will be familiar to you as standard HTTP Request Headers. The CustomHeader property allows ultimate flexibility, especially when working with CSOM based applications or external load balancers and other network “traffic managers”.

The Match Types available for each of the above properties are:

- EndsWith

- Equals

- Regex

- StartsWith

Again, fairly straightforward. However, care should always be taken with Regular Expressions. A poor regex, or worse, one used when an alternative match type would work, can negatively impact the performance of the Request Manager and therefore the end user response times.
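As a rough illustration of how the four match types differ, the sketch below applies each of them to a request property. It is a stand-in written in Python, not how SharePoint evaluates criteria: the `criteria_matches` function is hypothetical, the comparison is assumed to be case-sensitive, and the user agent string is made up.

```python
import re
from typing import Dict


def criteria_matches(request: Dict[str, str], prop: str, match_type: str, value: str) -> bool:
    """Check one request property against a value using the given match type."""
    actual = request.get(prop)
    if actual is None:
        return False  # the request does not carry this property at all
    if match_type == "Equals":
        return actual == value
    if match_type == "StartsWith":
        return actual.startswith(value)
    if match_type == "EndsWith":
        return actual.endswith(value)
    if match_type == "Regex":
        # The regex engine does far more work than a simple prefix test.
        return re.search(value, actual) is not None
    raise ValueError(f"unknown match type: {match_type}")


# Example usage: the same intent expressed two ways.
request = {"UserAgent": "Microsoft Office Excel 2013", "Url": "/sites/finance/book.xlsx"}
print(criteria_matches(request, "UserAgent", "StartsWith", "Microsoft Office Excel"))
print(criteria_matches(request, "UserAgent", "Regex", r"^Microsoft Office Excel"))
```

Both calls give the same answer for this request, which is exactly the case where the simpler match type is the better choice.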

Simple Scenarios

With the component and configuration properties covered, let’s take a look at a couple of canonical examples of how we can leverage Request Management.

- Rich Client Application interaction causes performance to decrease for Web browser requests.

Under heavy browse load a number of clients start loading large Excel workbooks from the Excel client. A throttling rule is added to refuse such requests from Excel based upon the UserAgent HTTP Header.

- One of five Web Servers is experiencing performance problems.

Request Management routes the majority of incoming requests to the four healthy servers, whilst the performance problems are being remediated on the sick server.
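The second scenario can be pictured with a small weighting calculation. The article does not state how the Request Manager combines Static Weighting and Health Weighting, so the formula below is purely an assumption for illustration: a lower Health Score is treated as healthier, the health weight is taken as `10 - health_score`, and it is multiplied by the static weight. The function and server names are hypothetical.

```python
from typing import Dict, Tuple


def routing_shares(targets: Dict[str, Tuple[float, int]]) -> Dict[str, float]:
    """Map each target name to its expected share of requests.

    targets: name -> (static_weight, health_score), health_score in 0..10.
    Assumed formula (not SharePoint's): weight = static_weight * (10 - health_score).
    """
    weights = {
        name: static_weight * (10 - health_score)
        for name, (static_weight, health_score) in targets.items()
    }
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("no target has a positive weight")
    return {name: weight / total for name, weight in weights.items()}


# Four healthy servers and one struggling server, all with equal static weight.
farm = {
    "WFE1": (1.0, 0),
    "WFE2": (1.0, 0),
    "WFE3": (1.0, 0),
    "WFE4": (1.0, 0),
    "WFE5": (1.0, 8),  # the sick server
}
for name, share in routing_shares(farm).items():
    print(f"{name}: {share:.1%}")
```

Under these assumed weights the sick server still receives a small trickle of traffic rather than none, which matches the idea of routing the majority of requests to the healthy servers while remediation is under way.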

Relationship with other techniques

What about SharePoint Throttling?

SharePoint 2010 introduced throttling based upon a health score. However that capability leaves a lot to be desired especially in large scale scenarios. Fundamentally throttling alone cannot deal with a Web server failure either at the machine or IIS level. Furthermore it is up to the client application to be a good citizen and respect the health score, which requires implementation in the client application. This is a massive deal as we move towards a cloud of CSOM based solutions.

But my load balancer, traffic manager or network optimizer from [insert switch vendor name here] can do that stuff.

Sure, many can. But none are integrated into the SharePoint pipeline. Furthermore each vendor’s implementation approach is different, sometimes even different between modules or products from the same vendor. This makes the overall manageability of the solution complex, and complexity is our number one enemy. Pipeline integration allows us to activate throttling and routing directly based upon characteristics of the farm or server(s) – something no third party switch can do without additional round trips. We also need to be in a position to change our switch without having to worry about migrating such functionality.

Does this mean I don’t need a load balancer?

Probably not. In most cases you will still need a classic load balancer between your clients and the SharePoint farm. Only in very small scale deployments would Request Management be suitable as a replacement for a classic load balancer, especially given there is one for free with Windows Server.

All sounds like a rip off of Application Request Routing (ARR) in Internet Information Services.

Indeed Request Management shares many concepts and similarities with ARR. However support for such IIS add-ons with SharePoint is problematic at best. ARR has already been through a number of incarnations, one of which was dreadful in terms of performance. SharePoint cannot afford to be beholden to such an add-on; it must be a “standalone” independent pipeline. In the same way that we should not use IIS Shared Configuration in a SharePoint farm, we must be extremely cautious with things like ARR. Also, as with external devices, the lack of pipeline integration restricts our operational service management flexibility.

Conclusion

Request Management allows SharePoint to understand more about, and control the handling of, incoming requests. Request Management employs a rules based approach, which allows SharePoint to take the appropriate action for a given request. This article covered the broad feature capability and provided an architecture overview of Request Management.

Stay tuned for the next part, which will present an example scenario and provide a step by step of the necessary configuration.
